ECLIPSE: Efficient Long-range Video Retrieval using Sight and Sound

Yan-Bo Lin Jie Lei Mohit Bansal Gedas Bertasius

UNC Chapel Hill

Abstract

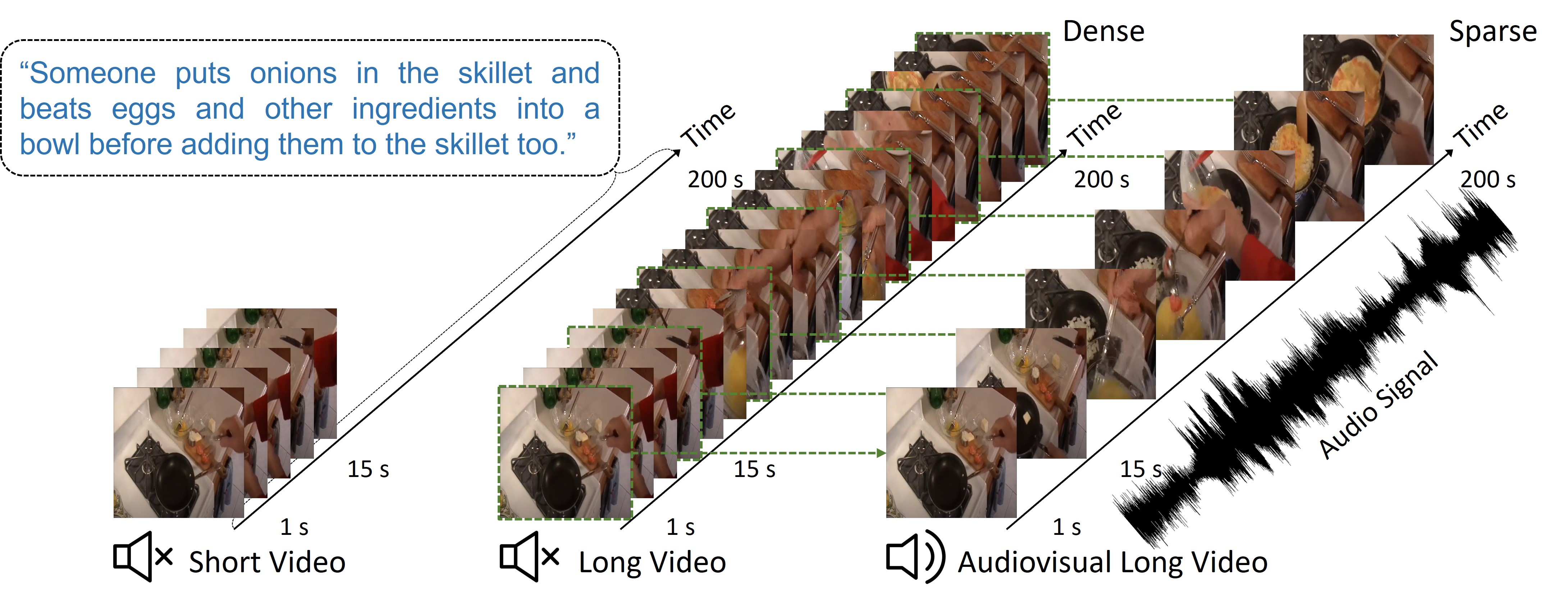

We introduce an audiovisual method for long-range text-to-video retrieval. Unlike previous approaches designed for short video retrieval (e.g., 5-15 seconds in duration), our approach aims to retrieve minute-long videos that capture complex human actions. One challenge of standard video-only approaches is the large computational cost of processing hundreds or even thousands of densely extracted frames from such long videos. To address this issue, we propose to replace parts of the video with compact audio cues that succinctly summarize dynamic audio events and are cheap to process. Our method, named ECLIPSE (Efficient CLIP with Sound Encoding), adapts the popular CLIP model to an audiovisual video setting by adding a unified audiovisual transformer block that captures complementary cues from the video and audio streams. In addition to being 2.92× faster and 2.34× more memory-efficient than long-range video-only approaches, our method also achieves better text-to-video retrieval accuracy on several diverse long-range video datasets such as ActivityNet, QVHighlights, YouCook2, and DiDeMo.
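To make the idea of fusing video and audio cues before retrieval concrete, the following is a minimal pure-Python sketch, not the ECLIPSE implementation. It assumes a single attention head with no learned projections, layer normalization, or CLIP backbone. All function names (`cross_attend`, `mean_pool`, `rank_videos`) and the toy 2-dimensional embeddings are hypothetical, chosen only for illustration. Sparse frame tokens attend over audio tokens, the fused tokens are averaged into one video embedding, and videos are ranked by cosine similarity to a text embedding.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attend(queries, keys_values):
    # Each query token (e.g. a video frame) attends over the tokens of the
    # other modality (e.g. audio). Assumption: keys and values are the same
    # raw tokens, with no learned projection matrices.
    scale = 1.0 / math.sqrt(len(queries[0]))
    fused = []
    for q in queries:
        weights = softmax([dot(q, k) * scale for k in keys_values])
        attended = [
            sum(w * kv[d] for w, kv in zip(weights, keys_values))
            for d in range(len(q))
        ]
        # Residual connection: keep the original token and add the audio cue.
        fused.append([qi + ai for qi, ai in zip(q, attended)])
    return fused


def mean_pool(tokens):
    # Average all tokens into a single embedding vector.
    n = len(tokens)
    return [sum(t[d] for t in tokens) / n for d in range(len(tokens[0]))]


def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def rank_videos(text_emb, videos):
    # videos maps a name to (frame_tokens, audio_tokens).
    # Returns video names sorted from most to least similar to the text.
    scores = {}
    for name, (frames, audio) in videos.items():
        fused = cross_attend(frames, audio)
        scores[name] = cosine(text_emb, mean_pool(fused))
    return sorted(scores, key=scores.get, reverse=True)


# Toy data (made up for illustration): two videos, two frames each,
# one audio token each, and a text query embedding.
videos = {
    "cooking": ([[1.0, 0.0], [0.8, 0.2]], [[1.0, 0.1]]),
    "skiing": ([[0.0, 1.0], [0.1, 0.9]], [[0.0, 1.0]]),
}
print(rank_videos([1.0, 0.0], videos))
```

The cost intuition carries over from the abstract: attention cost grows with the number of frame tokens, so using a few frames plus a handful of audio tokens is cheaper than attending over hundreds of densely sampled frames.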

Demo Video

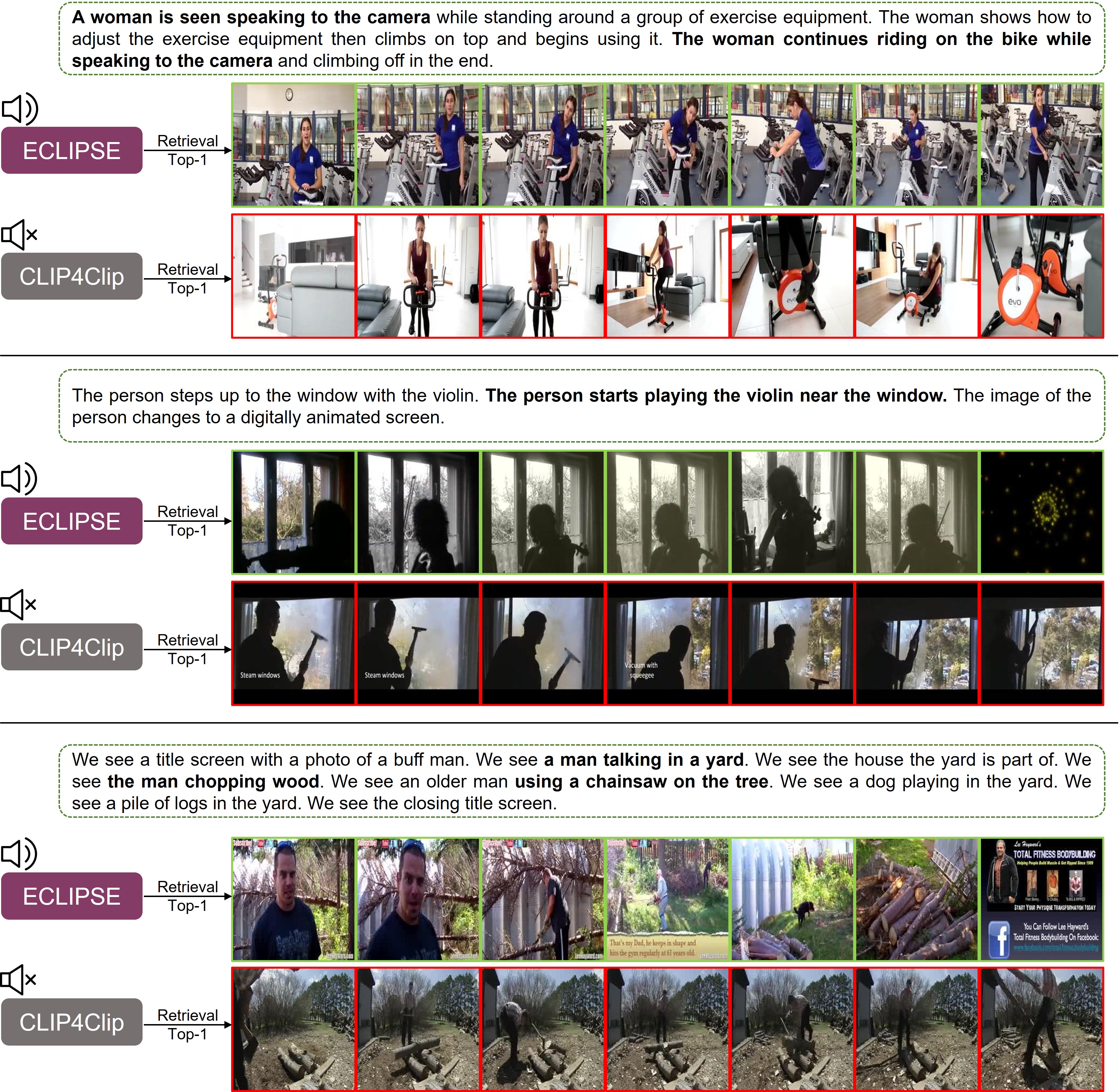

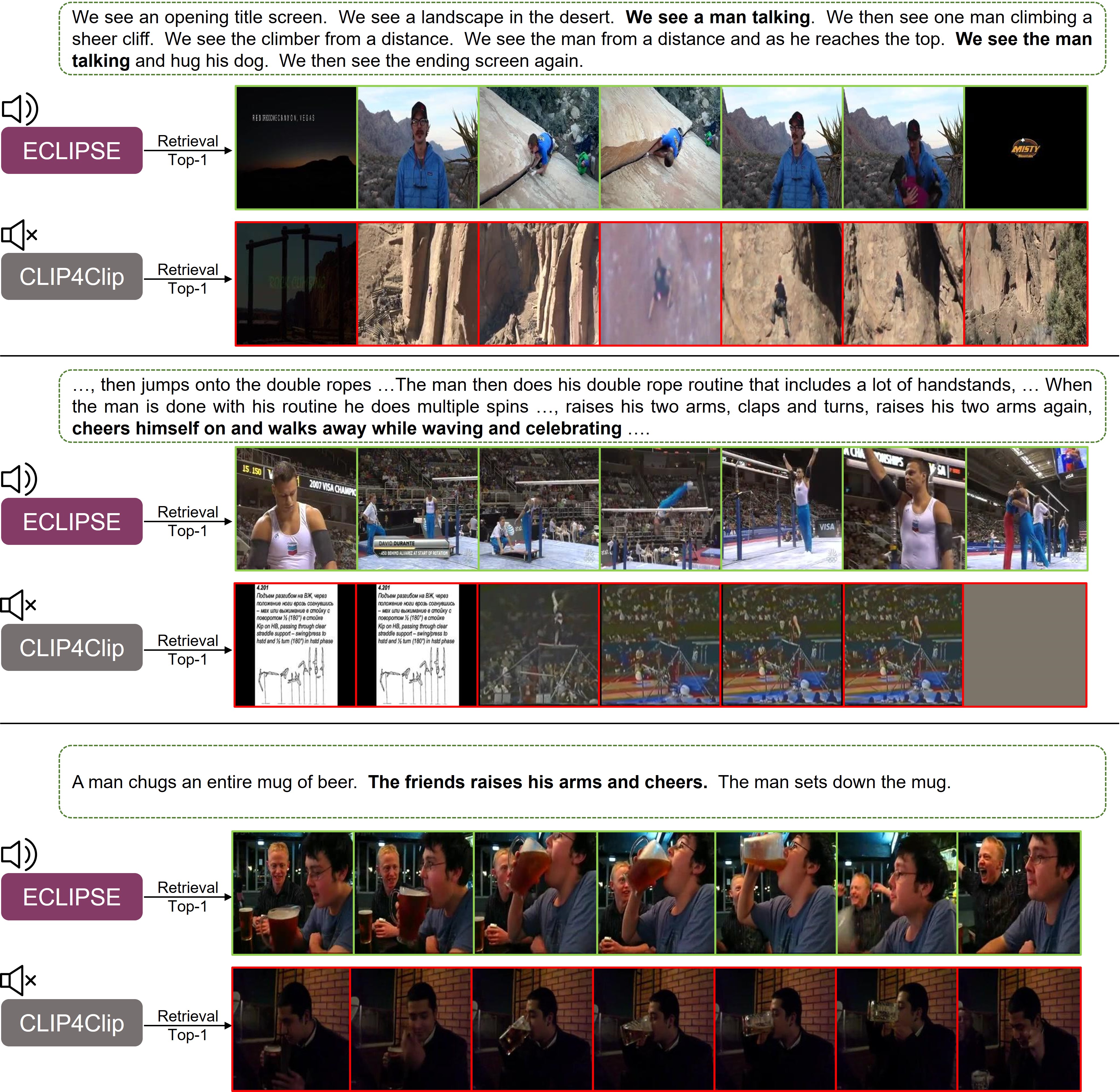

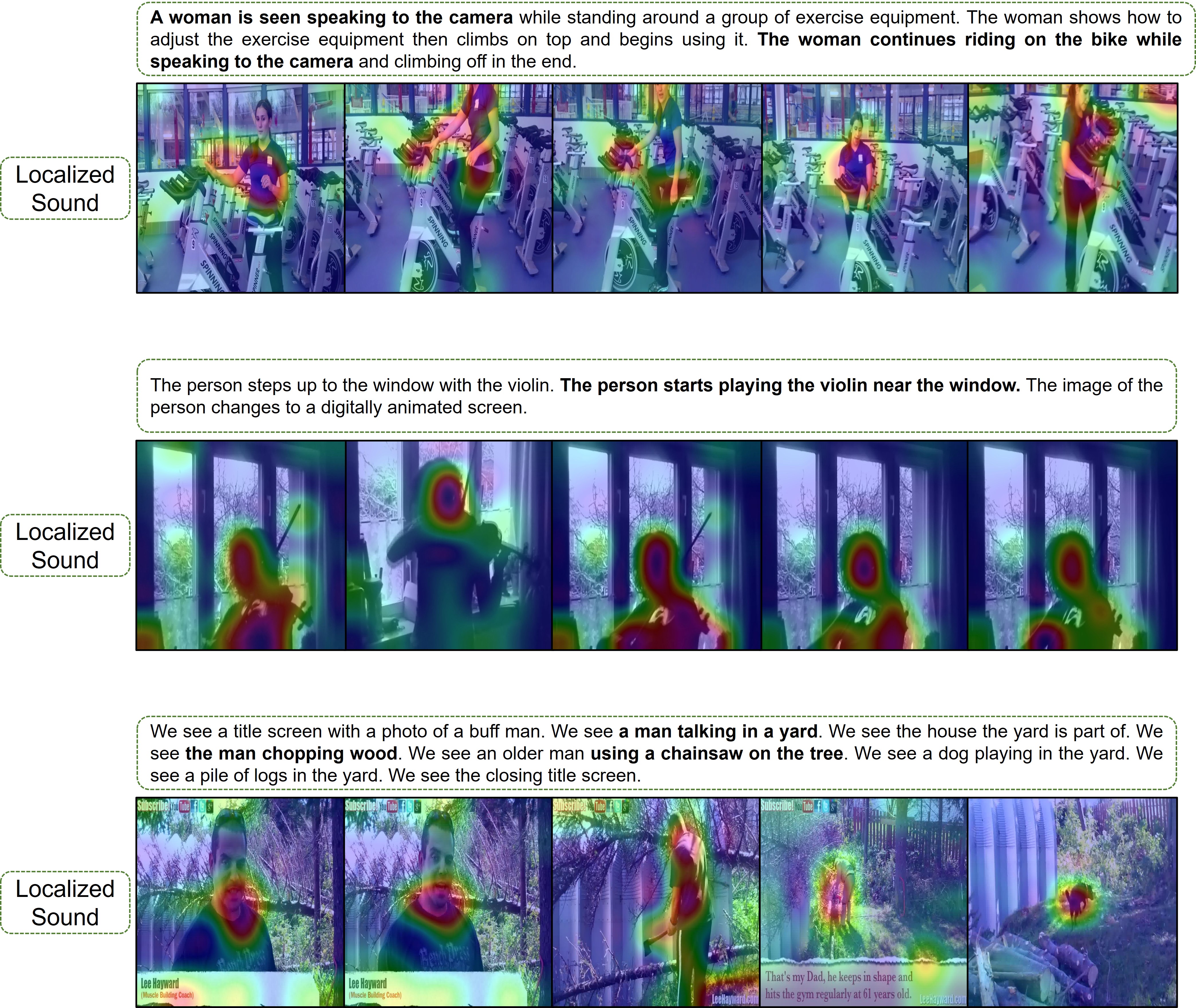

Qualitative Results

BibTeX

@InProceedings{ECLIPSE_ECCV22,
  author    = {Yan-Bo Lin and Jie Lei and Mohit Bansal and Gedas Bertasius},
  title     = {ECLIPSE: Efficient Long-range Video Retrieval using Sight and Sound},
  booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},
  month     = {October},
  year      = {2022}
}